Reference¶

https://www.kaggle.com/bittlingmayer/amazonreviews/home

http://blog.conceptnet.io/posts/2017/how-to-make-a-racist-ai-without-really-trying/

http://mlg.ucd.ie/datasets/bbc.html

https://en.wikipedia.org/wiki/Sentiment_analysis

https://machinelearningmastery.com/prepare-text-data-deep-learning-keras/

https://skymind.ai/wiki/bagofwords-tf-idf

https://www.tensorflow.org/tutorials/representation/word2vec

https://skymind.ai/wiki/word2vec

http://ruder.io/word-embeddings-1/

Mikolov, T., Corrado, G., Chen, K., & Dean, J. (2013). Efficient Estimation of Word Representations in Vector Space. Proceedings of the International Conference on Learning Representations (ICLR 2013), 1–12. ↩︎

Mikolov, T., Chen, K., Corrado, G., & Dean, J. (2013). Distributed Representations of Words and Phrases and their Compositionality. NIPS, 1–9. ↩︎

What is Sentiment Analysis¶

Sentiment analysis is ubiquitous, and it is a classification technique based on natural language processing. The main problem to be solved is to judge whether a sentence (or an article) is positive or negative.

Why we need Sentiment Analysis¶

Dealing with sentiment analysis can greatly improve people's understanding of things, and can also use the conclusions of sentiment analysis to serve other people or things.

For example, government can understand citizens' sentiment towards popular events. Therefore, they are able to grasp the public opinion orientation, and conduct public opinion monitoring in a timely and effective manner.

In this tutorial, we will mainly focus on¶

+Prepare Text Data for Deep Learning with Keras¶

1. Tokenize Words

2. The feature of the document

3. Word Embedding.

--Continuous Bag of Words Model (CBOW)

--Skip-gram

+Semantic analysis based on dictionary (Traditional way).¶

+Semantic analysis based on deep learning¶

1. Sequence processing with RNN(LSTM)

2. Sequence processing with 1-d Convolution OK! Let's start our tutorial!¶

from IPython.display import Image

from IPython.core.display import HTML

from keras.models import Model, Sequential

from keras.layers import Dense, Embedding, Input, Conv1D, GlobalMaxPool1D, Dropout, concatenate, Layer, InputSpec, CuDNNLSTM

from keras.preprocessing import text, sequence

from keras.callbacks import EarlyStopping, ModelCheckpoint

from keras import backend as K

from keras import activations, initializers, regularizers, constraints

from keras.utils.conv_utils import conv_output_length

from keras.regularizers import l2

from keras.constraints import maxnorm

import numpy as np

import pandas as pd

import os,shutil

import warnings

Image(url="https://images-na.ssl-images-amazon.com/images/I/41pih9cD1-L._SX355_.jpg", width=400, height=400)

1.Prepare Text Data for Deep Learning with Keras¶

1.1 Tokenize Words¶

Tokenization is a way to split text into tokens. These tokens could be paragraphs, sentences, individual words or even characters.

Most of the time we split text into words

https://machinelearningmastery.com/prepare-text-data-deep-learning-keras/

docs = [' test Tokenize Words!',

' test This is the corpus~~~~~',

'test Use Kears Tokenize?!@#',

'test test test test...',

' ']

from keras.preprocessing.text import Tokenizer

t = Tokenizer()

# fit the tokenizer on the documents

t.fit_on_texts(docs)

# Once fit, the Tokenizer provides 4 attributes

# that you can use to query what has been learned about your documents:

print(t.word_counts) # A dictionary of words and their counts.

print(t.document_count) # A dictionary of words and how many documents each appeared in.

print(t.word_index) # A dictionary of words and their uniquely assigned integers.

print(t.word_docs) # An integer count of the total number of documents that were used to fit the Tokenizer.

docs = [' test Tokenize Words!',

' test This is the corpus~~~~~',

'test Use Kears Tokenize?!@#',

'test test test test...',

' ']

encoded_docs = t.texts_to_matrix(docs, mode='count')

print('test', 'tokenize', 'words', 'this' , 'is', 'the', 'corpus', 'use', 'kears')

print(encoded_docs)

1.2.2 TF IDF bag-of-words¶

Term-frequency-inverse document frequency (TF-IDF) is another way to judge the topic of an article by the words it contains. With TF-IDF, words are given weight – TF-IDF measures relevance, not frequency. That is, wordcounts are replaced with TF-IDF scores across the whole dataset.

Higher weight means this word is more important. The intuition behind this is that assume a certain word appears a lot in a document,and it always appears in other documents. We can not say this word is important since it is so common. So it should be given lower weight.

Image(url= "https://skymind.ai/images/wiki/tfidf.png",width=400, height=400)

Example:

Consider a document containing 100 words wherein the word cat appears 3 times. The term frequency (i.e., tf) for cat is then (3 / 100) = 0.03. Now, assume we have 10 million documents and the word cat appears in one thousand of these. Then, the inverse document frequency (i.e., idf) is calculated as log(10,000,000 / 1,000) = 4. Thus, the Tf-idf weight is the product of these quantities: 0.03 * 4 = 0.12.

docs = [' test Tokenize Words!',

' test This is the corpus~~~~~',

'test Use Kears Tokenize?!@#',

'test test test test...',

' ']

encoded_docs = t.texts_to_matrix(docs, mode='tfidf')

print(' test', ' tokenize', ' words', ' this' , ' is', ' the', ' corpus', ' use', ' kears')

print(encoded_docs)

Image("img/re.png")

1.3 Word Embedding.¶

Word embedding is one of the most popular representation of document vocabulary. It is capable of capturing context of a word in a document, semantic and syntactic similarity, relation with other words, etc.

Word embeddings refer to dense representations of words in a low-dimensional vector space

Why Learn Word Embeddings?¶

Image(url="https://www.tensorflow.org/images/audio-image-text.png", width=850)

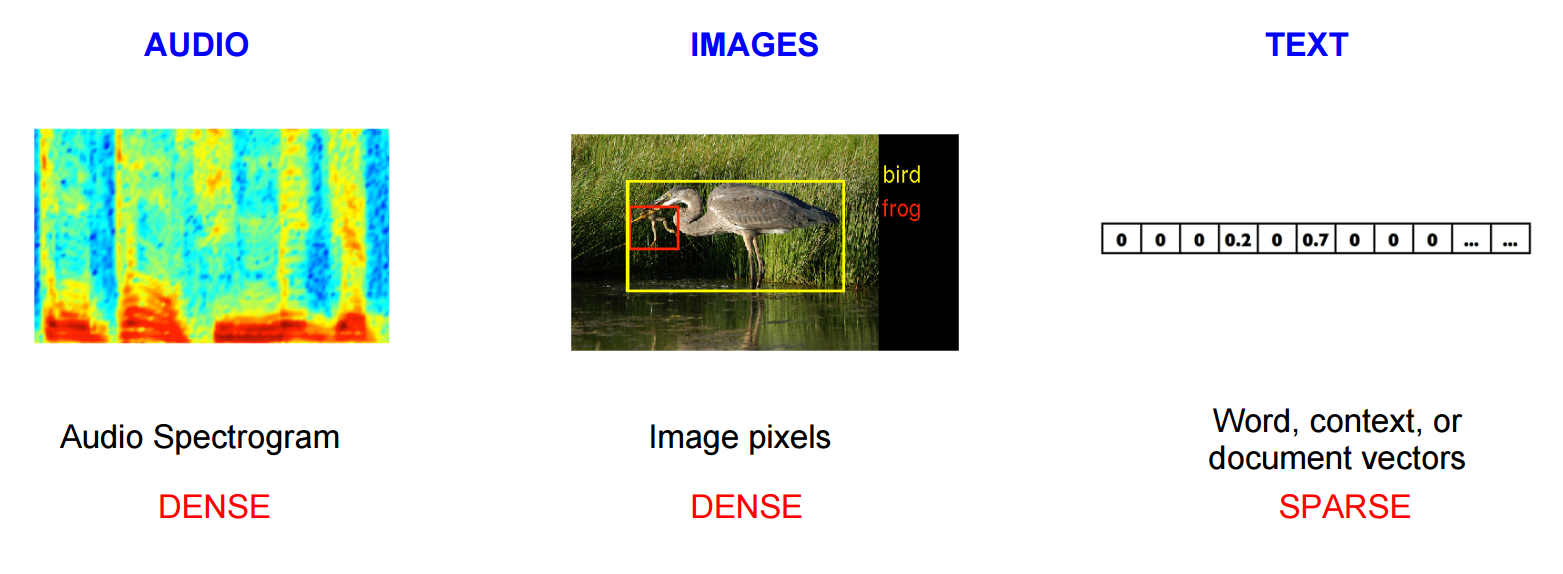

Image and audio processing systems work with rich, high-dimensional datasets encoded as vectors of the individual raw pixel-intensities for image data, or e.g. power spectral density coefficients for audio data. However, natural language processing systems traditionally treat words as discrete atomic symbols

Vector space models (VSMs) represent (embed) words in a continuous vector space where semantically similar words are mapped to nearby points ('are embedded nearby each other').

word2vec(most popular word embedding model): two-layer neural net that processes text¶

Turns text into a numerical form that deep nets can understand

Word2vec is similar to an autoencoder, encoding each word in a vector, but rather than training against the input words through reconstruction, word2vec trains words against other words that neighbor them in the input corpus.

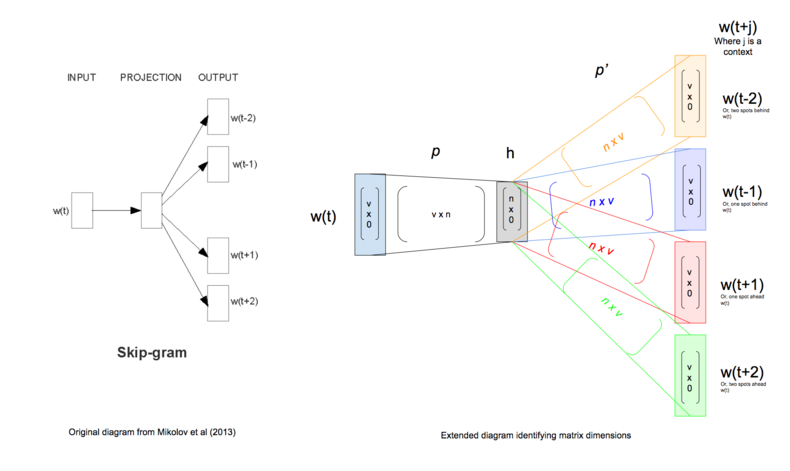

It does so in one of two ways, either using context to predict a target word (a method known as continuous bag of words, or CBOW), or using a word to predict a target context, which is called skip-gram. In practice we usually use the latter method because it produces more accurate results on large datasets.

Image(url="https://skymind.ai/images/wiki/word2vec_diagrams.png", width=600)

Extent reading---CBOW VS Skip-gram: http://cs224d.stanford.edu/lecture_notes/notes1.pdf

Skip-gram https://medium.com/district-data-labs/forward-propagation-building-a-skip-gram-net-from-the-ground-up-9578814b221

Why Skip-gram works better than CBOW: https://stats.stackexchange.com/questions/180548/why-is-skip-gram-better-for-infrequent-words-than-cbow

Continuous Bag of Words Model (CBOW): uses context to predict a target word¶

Maximize the log probabilities of the target word given by the surrounding words.

$J_\theta = \frac{1}{T}\sum\limits_{t=1}^T\ \text{log} \space p(w_t \: | \: w_{t-n} , \cdots , w_{t-1}, w_{t+1}, \cdots , w_{t+n})$

Image(url="https://i.stack.imgur.com/NEbGx.png", width=350)

Image("img/c1.png")

Assume our $x$ is 1000-d, we want to embed the words to 50-d, the $V$ will be a $(50 ,1000)$ matrix

Image("img/c2.png")

$U$ here is a (1000,50) matrix.

Skip-gram:uses the centre word to predict the surrounding words¶

objective thus sums the log probabilities of the surrounding n words to the left and to the right of the target word to produce the following objective:

$J_\theta = \frac{1}{T}\sum\limits_{t=1}^T\ \sum\limits_{-n \leq j \leq n , \neq 0} \text{log} \space p(w_{t+j} : | : w_t)$

Image(url="https://cdn-images-1.medium.com/max/800/0*xqhh7Gd64VAQ1Sny.png", width=550)

We must learn P and P' (through backpropagation ). When we finish, we call the P embedding matrix

Training Processing¶

Let's assume our context is +- 2.

- We generate our one hot input vector $W(t)$

- We get our embedded word vectors for the context $h=P W(t)$

- We get $W(t-2)= hP' $

- Turn each of the scores into probabilities, $\hat y = softmax(W(t-2))$

- compare with ground truth and do backpropagation

- input vector $W(t)$ again and get $W(t-1)$

.........

More tricks please read the original paper: https://papers.nips.cc/paper/5021-distributed-representations-of-words-and-phrases-and-their-compositionality.pdf

visualization of word embedding¶

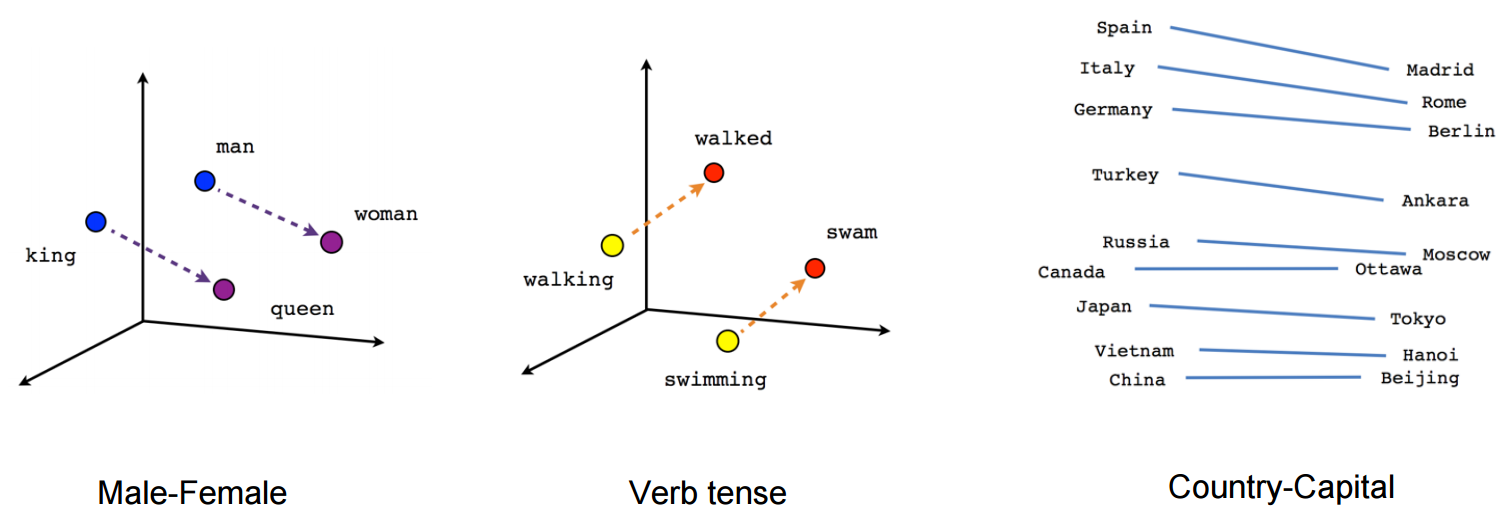

Image(url="https://www.tensorflow.org/images/linear-relationships.png", width=800)

We can visualize the learned vectors by projecting them down to 2 dimensions using for instance something like the t-SNE dimensionality reduction technique. When we inspect these visualizations it becomes apparent that the vectors capture some general, and in fact quite useful, semantic information about words and their relationships to one another!

Results looks good! But word embedding has a big shortcoming!¶

2. Semantic analysis based on dictionary and the defect of word embedding¶

More detail please look at: http://blog.conceptnet.io/posts/2017/how-to-make-a-racist-ai-without-really-trying/

Let's make a sentiment classifier!

import numpy as np

import pandas as pd

import matplotlib

# import seaborn

import re

# import statsmodels.formula.api

from sklearn.linear_model import SGDClassifier

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

We talked about word2vec. But this time we try GloVe, pretrained on the Common Crawl of web pages.

We download glove.42B.300d.zip from the GloVe web page, and extract it into data/glove.42B.300d.txt. Next we define a function to read the simple format of its word vectors.

def load_embeddings(filename):

"""

Load a DataFrame from the generalized text format used by word2vec, GloVe,

fastText, and ConceptNet Numberbatch. The main point where they differ is

whether there is an initial line with the dimensions of the matrix.

"""

labels = []

rows = []

with open(filename, encoding='utf-8') as infile:

for i, line in enumerate(infile):

items = line.rstrip().split(' ')

if len(items) == 2:

# This is a header row giving the shape of the matrix

continue

labels.append(items[0])

values = np.array([float(x) for x in items[1:]], 'f')

rows.append(values)

arr = np.vstack(rows)

return pd.DataFrame(arr, index=labels, dtype='f')

embeddings = load_embeddings('data/glove.42B.300d.txt')

embeddings.shape

embeddings[100:115]

We need some input about which words are positive and which words are negative.

We download the lexicon from Bing Liu's web site (https://www.cs.uic.edu/~liub/FBS/sentiment-analysis.html#lexicon) and extract it into data/positive-words.txt and data/negative-words.txt.

Next we define how to read these files, and read them in as the pos_words and neg_words variables:

def load_lexicon(filename):

"""

Load a file from Bing Liu's sentiment lexicon

(https://www.cs.uic.edu/~liub/FBS/sentiment-analysis.html), containing

English words in Latin-1 encoding.

One file contains a list of positive words, and the other contains

a list of negative words. The files contain comment lines starting

with ';' and blank lines, which should be skipped.

"""

lexicon = []

with open(filename, encoding='latin-1') as infile:

for line in infile:

line = line.rstrip()

if line and not line.startswith(';'):

lexicon.append(line)

return lexicon

pos_words = load_lexicon('data/positive-words.txt')

neg_words = load_lexicon('data/negative-words.txt')

Now we get their embedding vector from embedding matrix

pos_vectors = embeddings.loc[pos_words].dropna()

neg_vectors = embeddings.loc[neg_words].dropna()

vectors = pd.concat([pos_vectors, neg_vectors])

targets = np.array([1 for entry in pos_vectors.index] + [-1 for entry in neg_vectors.index])

# we used 1 for positive words and -1 for negative words.

labels = list(pos_vectors.index) + list(neg_vectors.index)

# we have 6616 words

vectors.shape

Using the scikit-learn train_test_split function, we simultaneously separate the input vectors, output values, and labels into training and test data, with 10% of the data used for testing.

train_vectors, test_vectors, train_targets, test_targets, train_labels, test_labels = \

train_test_split(vectors, targets, labels, test_size=0.1, random_state=0)

model = SGDClassifier(loss='log', random_state=0, n_iter=150)

model.fit(train_vectors, train_targets)

accuracy_score(model.predict(test_vectors), test_targets)

Let's define a function that we can use to see the sentiment that this classifier predicts for particular words, then use it to see some examples of its predictions on the test data.

def vecs_to_sentiment(vecs):

# predict_log_proba gives the log probability for each class

predictions = model.predict_log_proba(vecs)

# To see an overall positive vs. negative classification in one number,

# we take the log probability of positive sentiment minus the log

# probability of negative sentiment.

return predictions[:, 1] - predictions[:, 0]

def words_to_sentiment(words):

vecs = embeddings.loc[words].dropna()

log_odds = vecs_to_sentiment(vecs)

return pd.DataFrame({'sentiment': log_odds}, index=vecs.index)

# Show 20 examples from the test set

words_to_sentiment(test_labels).iloc[300:320]

More than the accuracy number, this convinces us that the classifier is working. We can see that the classifier has learned to generalize sentiment to words outside of its training data.

import re

TOKEN_RE = re.compile(r"\w.*?\b")

# The regex above finds tokens that start with a word-like character (\w), and continues

# matching characters (.+?) until the next word break (\b). It's a relatively simple

# expression that manages to extract something very much like words from text.

def text_to_sentiment(text):

tokens = [token.casefold() for token in TOKEN_RE.findall(text)]

sentiments = words_to_sentiment(tokens)

return sentiments['sentiment'].mean()

We can average words sentiment scores to get the sentiment for a sentence.

text_to_sentiment("this example is brilliant")

text_to_sentiment("The weather is good today")

text_to_sentiment("I love you")

text_to_sentiment("I hate you")

Seems like we got good results!......But what about the following exmample

text_to_sentiment("The weather is not good today")

text_to_sentiment("This project is not perfect")

Besides, this classifier also has strong bias!

text_to_sentiment("Let's go get Italian food")

text_to_sentiment("Let's go get Chinese food")

text_to_sentiment("Let's go get Mexican food")

Word embedding was greatly affected by the training data...and the traditional way to do sentiment analysis was not considering the content.... To deal with the first problem, we could use the new "ConceptNet" http://www.conceptnet.io/

And the existing deep learning model like LSTM could capture the content

3.Semantic analysis based on deep learning¶

In this section, we will use the dataset from https://www.kaggle.com/bittlingmayer/amazonreviews

This dataset consists of a few million Amazon customer reviews (input text) and star ratings (0-negative 1 -positive) for learning how to train fastText for sentiment analysis.

Let's do a brief review about LSTM.

Image(url="http://colah.github.io/posts/2015-08-Understanding-LSTMs/img/LSTM3-chain.png", width=700)

Image(url="http://colah.github.io/posts/2015-08-Understanding-LSTMs/img/LSTM2-notation.png", width=600)

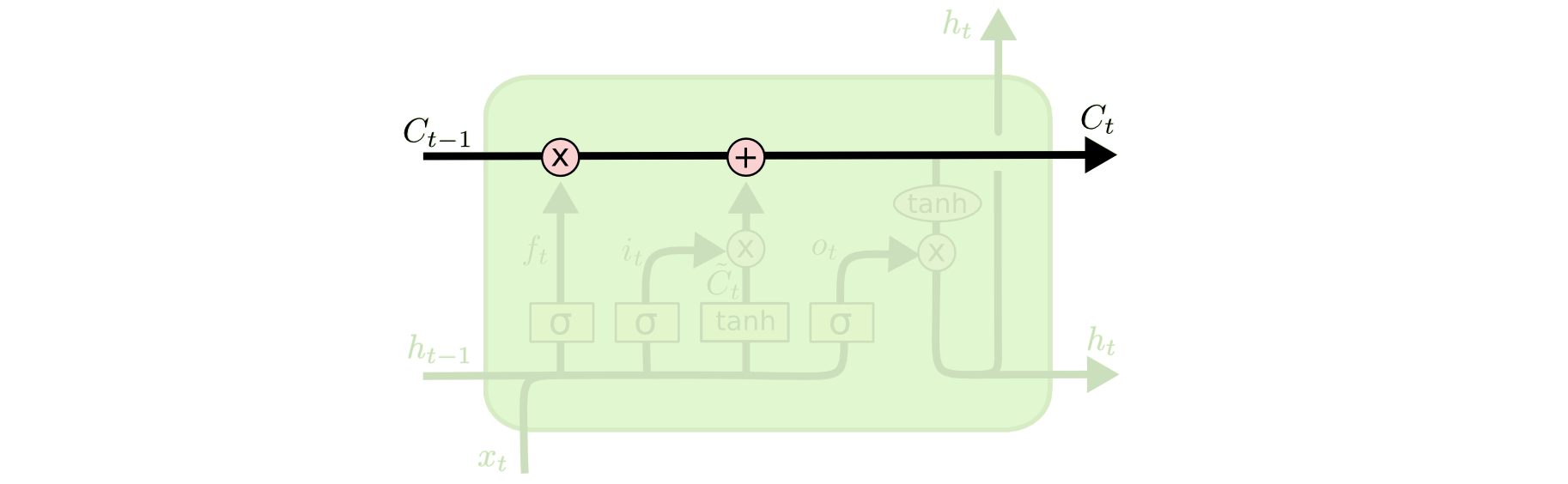

Step-by-Step LSTM Walk Through¶

The key to LSTMs is how to control the cell state, the horizontal line running through the top of the diagram.

Image(url="http://colah.github.io/posts/2015-08-Understanding-LSTMs/img/LSTM3-C-line.png", width=600)

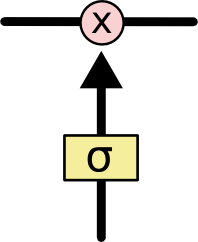

Here, the idea of LSTM is to use three gates to protect and control the cell state. It is a way to optionally let information through. They are composed out of a sigmoid neural net layer and a pointwise multiplication operation.

Image(url="http://colah.github.io/posts/2015-08-Understanding-LSTMs/img/LSTM3-gate.png", width=100)

the most important idea you need to understand in LSTM is: using sigmoid layer as a gate¶

The sigmoid layer outputs numbers between zero and one, describing how much of each component should be let through. A value of zero means “let nothing through,” while a value of one means “let everything through!”

The name of three gates are:

forget gate

input gate

ourput gate

Image(url="http://colah.github.io/posts/2015-08-Understanding-LSTMs/img/LSTM3-focus-f.png", width=800)

The first step in our LSTM is to decide what information we’re going to throw away from the cell state. This decision is made by a sigmoid layer called the “forget gate layer.” It looks at $h_{t−1}$ and $x_t$, and outputs a number between 0 and 1 for each number in the cell state $C_{t−1}$. 1 represents “completely keep this” while a 0 represents “completely get rid of this.”

$f_t$=[0, 0, 0.5 ,1, 0.9]

Image(url="http://colah.github.io/posts/2015-08-Understanding-LSTMs/img/LSTM3-focus-i.png", width=800)

The next step is to decide what new information we’re going to store in the cell state. This has two parts.

First, a sigmoid layer called the “input gate layer” decides which values(or how much) we’ll update. We got $i_t$

Next, a tanh layer creates a vector of new candidate values, $\tilde{C}_t$, that could be added to the state.

$i_t * \tilde{C}_t $ means how much we will update the current cell state

It’s now time to update the old cell state, $C_{t−1}$, into the new cell state $ C_t$. The previous steps already decided what to do, we just need to actually do it.

Image(url="http://colah.github.io/posts/2015-08-Understanding-LSTMs/img/LSTM3-focus-C.png", width=800)

This equation means: forgetting the things we decided to forget earlier: $f_t * C_{t-1}$

plus

the new candidate values, scaled by how much we decided to update each state value. $ i_t *\tilde{C}_t $

Image(url="http://colah.github.io/posts/2015-08-Understanding-LSTMs/img/LSTM3-focus-o.png", width=800)

Finally, we need to decide what we’re going to output. This output will be based on our cell state, but will be a filtered version. First, we run a sigmoid layer which decides what parts of the cell state we’re going to output. Then, we put the cell state through tanh (to push the values to be between −1 and 1) and multiply it by the output of the sigmoid gate, so that we only output the parts we decided to.

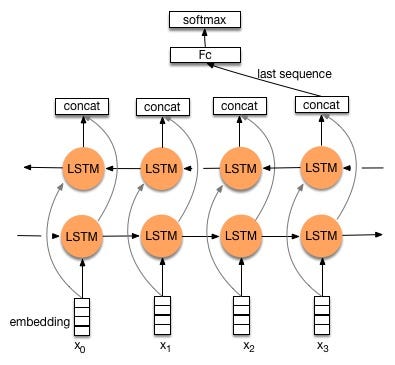

After understand LSTM, we will use a variants version called bidirectional LSTM. Because, using bidirectional will run your inputs in two ways, one from past to future and one from future to past. So you could preserve information from both past and future.

Image(url="https://cdn-images-1.medium.com/max/800/1*GRQ91HNASB7MAJPTTlVvfw.jpeg", width=500)

from keras.models import Model, Sequential

from keras.layers import Dense, Embedding, Input, Conv1D, Activation,GlobalMaxPool1D, Dropout, concatenate, GlobalAveragePooling1D,Layer, InputSpec, CuDNNLSTM,BatchNormalization

from keras.preprocessing import text, sequence

from keras.callbacks import EarlyStopping, ModelCheckpoint

from keras import backend as K

from keras import activations, initializers, regularizers, constraints

from keras.utils.conv_utils import conv_output_length

from keras.regularizers import l2

from keras.constraints import maxnorm

import numpy as np

import pandas as pd

import bz2

import gc

import chardet

import re

import os

train_file = bz2.BZ2File('data/train.ft.txt.bz2')

test_file = bz2.BZ2File('data/test.ft.txt.bz2')

train_file_lines = train_file.readlines()

test_file_lines = test_file.readlines()

del train_file, test_file

Convert from raw binary strings to strings that can be parsed

train_file_lines = [x.decode('utf-8') for x in train_file_lines]

test_file_lines = [x.decode('utf-8') for x in test_file_lines]

train_labels = [0 if x.split(' ')[0] == '__label__1' else 1 for x in train_file_lines]

train_sentences = [x.split(' ', 1)[1][:-1].lower() for x in train_file_lines]

for i in range(len(train_sentences)):

train_sentences[i] = re.sub('\d','0',train_sentences[i])

test_labels = [0 if x.split(' ')[0] == '__label__1' else 1 for x in test_file_lines]

test_sentences = [x.split(' ', 1)[1][:-1].lower() for x in test_file_lines]

for i in range(len(test_sentences)):

test_sentences[i] = re.sub('\d','0',test_sentences[i])

for i in range(len(train_sentences)):

if 'www.' in train_sentences[i] or 'http:' in train_sentences[i] or 'https:' in train_sentences[i] or '.com' in train_sentences[i]:

train_sentences[i] = re.sub(r"([^ ]+(?<=\.[a-z]{3}))", "<url>", train_sentences[i])

for i in range(len(test_sentences)):

if 'www.' in test_sentences[i] or 'http:' in test_sentences[i] or 'https:' in test_sentences[i] or '.com' in test_sentences[i]:

test_sentences[i] = re.sub(r"([^ ]+(?<=\.[a-z]{3}))", "<url>", test_sentences[i])

del train_file_lines, test_file_lines

print('We have',len(train_sentences),'sentences in training set')

print('We have',len(test_sentences),'sentences in test set')

# Creates a tokenizer, configured to only take into account the 1,0000 most common words

max_features = 10000

# Cuts off the text after this number of words (among the max_features most ommon words)

maxlen = 128

# we used glove embedding

embed_size = 300

tokenizer = text.Tokenizer(num_words=max_features)

tokenizer.fit_on_texts(train_sentences)

tokenized_train = tokenizer.texts_to_sequences(train_sentences)

#Turns the lists of integers into a 2D integer tensor of shape (samples, maxlen)

X_train = sequence.pad_sequences(tokenized_train, maxlen=maxlen)

tokenized_test = tokenizer.texts_to_sequences(test_sentences)

X_test = sequence.pad_sequences(tokenized_test, maxlen=maxlen)

# # save them to save time

# np.save('X_train.npy', X_train)

# np.save('X_test.npy', X_test)

print(X_train.shape)

print(X_test.shape)

word_index = tokenizer.word_index

print('Found %s unique tokens.' % len(word_index))

# X_train = np.load('X_train.npy')

# X_test = np.load('X_test.npy')

del embeddings

# EMBEDDING_FILE='data/glove.42B.300d.txt'

Preparing the Embedding layer¶

https://blog.keras.io/using-pre-trained-word-embeddings-in-a-keras-model.html

Next, we compute an index mapping words to known embeddings, by parsing the data dump of pre-trained embeddings:

# embeddings_index = {}

# f = open('data/glove.42B.300d.txt',encoding='utf-8')

# for line in f:

# values = line.split()

# word = values[0]

# coefs = np.asarray(values[1:], dtype='float32')

# embeddings_index[word] = coefs

# f.close()

# print('Found %s word vectors.' % len(embeddings_index))

# embeddings_index['apple'].shape

At this point we can leverage our embedding_index dictionary and our word_index to compute our embedding matrix:

EMBEDDING_DIM=64

# embedding_matrix = np.zeros((len(word_index) + 1, EMBEDDING_DIM))

# for word, i in word_index.items():

# embedding_vector = embeddings_index.get(word)

# if embedding_vector is not None:

# # words not found in embedding index will be all-zeros.

# embedding_matrix[i] = embedding_vector

# embedding_matrix.shape

We load this embedding matrix into an Embedding layer. Note that we set trainable=False to prevent the weights from being updated during training.

from keras.layers import Embedding,Bidirectional,LSTM

# embedding_layer = Embedding(len(word_index) + 1,

# EMBEDDING_DIM,

# weights=[embedding_matrix],

# input_length=maxlen,

# trainable=False)

def cudnnlstm_model(conv_layers = 2, max_dilation_rate = 3):

inp = Input(shape=(maxlen, ))

######## load this embedding matrix

x = Embedding(len(word_index) + 1,

EMBEDDING_DIM,

# weights=[embedding_matrix],

input_length=maxlen,

trainable=True

)(inp)

x = BatchNormalization()(x)

x = Bidirectional(CuDNNLSTM(512, return_sequences=True))(x)

x = BatchNormalization()(x)

x =Bidirectional(CuDNNLSTM(256))(x)

x = BatchNormalization()(x)

x = Dense(128, activation="relu")(x)

x = Dropout(0.5)(x)

x = Dense(1, activation="sigmoid")(x)

model = Model(inputs=inp, outputs=x)

model.compile(loss='binary_crossentropy',

optimizer='adam',

metrics=['binary_accuracy'])

return model

cudnnlstm_model = cudnnlstm_model()

cudnnlstm_model.summary()

weight_path="early_weights.hdf5"

checkpoint = ModelCheckpoint(weight_path, monitor='val_loss', verbose=1, save_best_only=True, mode='min')

early_stopping = EarlyStopping(monitor="val_loss", mode="min", patience=5)

callbacks = [checkpoint, early_stopping]

batch_size = 1024

epochs = 5

cudnnlstm_model.fit(X_train, train_labels,

batch_size=batch_size,

epochs=epochs,

shuffle = True, validation_split=0.10,

callbacks=callbacks)

.....40 mins per Epoch

cudnnlstm_model.load_weights(weight_path)

score, acc = cudnnlstm_model.evaluate(X_test, test_labels, batch_size=batch_size)

print('Test score:', score)

print('Test accuracy:', acc)

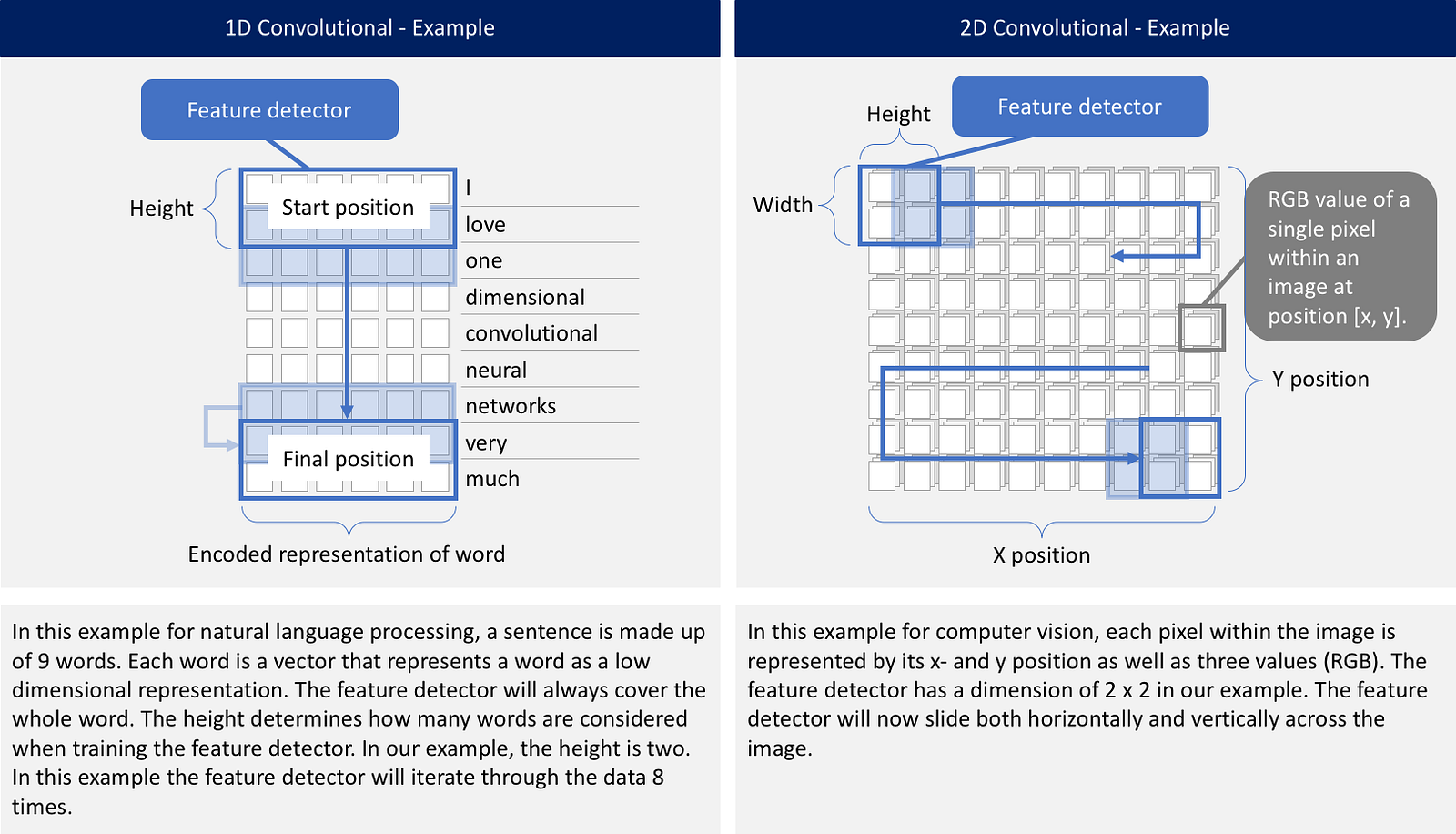

Sequence processing with 1D-CNN¶

Image(url="https://cdn-images-1.medium.com/max/1600/1*aBN2Ir7y2E-t2AbekOtEIw.png", width=900)

input = Input(shape=(maxlen,))

net = Embedding(max_features, 64)(input)

net = Dropout(0.2)(net)

net = BatchNormalization()(net)

net = Conv1D(32, 7, padding='same', activation='relu')(net)

net = BatchNormalization()(net)

net = Conv1D(32, 3, padding='same', activation='relu')(net)

net = BatchNormalization()(net)

net = Conv1D(32, 3, padding='same', activation='relu')(net)

net = BatchNormalization()(net)

net = Conv1D(32, 3, padding='same', activation='relu')(net)

net = BatchNormalization()(net)

net = Conv1D(2, 1)(net)

net = GlobalAveragePooling1D()(net)

net = Dense(1, activation="sigmoid")(net)

output =net

model = Model(inputs = input, outputs = output)

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['acc'])

model.summary()

weight_path="cnn1d_weights.hdf5"

checkpoint = ModelCheckpoint(weight_path, monitor='val_loss', verbose=1, save_best_only=True, mode='min')

early_stopping = EarlyStopping(monitor="val_loss", mode="min", patience=2)

callbacks = [checkpoint, early_stopping]

model.fit(X_train, train_labels, batch_size=2048, epochs=5, validation_split=0.1,callbacks=callbacks)

model.load_weights(weight_path)

score, acc = model.evaluate(X_test, test_labels, batch_size=1024)

print('Test score:', score)

print('Test accuracy:', acc)

Using 1-D convolution, we achieve almost the same accuracy compared with LSTM (94.7% vs 95.4%). But the training time was reduced a lot! (2415/epoch vs 72s/epoch)

Finished! Questions?